Nick Sheero

Feb 7, 2024

At TensorSense, we're making multimodal LLMs reason about what humans do on cameras, analyzing things like behavior, conditions, and skills.

One of the key breakthroughs we've achieved is in predicting specific mental states from webcam footage, such as deceitfulness, concentration, and attention. This journey has led us to game-changing insights about facial expressions and emotions: it turns out that a lot of common sense about them might need an update.

We're excited to share these discoveries to help researchers and developers refine their work with emotion data. It's all about building smarter, more accurate systems that truly get the human element.

Wait, didn't engineers solve emotion recognition years ago?

Despite the buzz around emotion recognition technology, evidenced by over 523 public repositories tagged with emotion-recognition on GitHub, it's surprising to see its slow adoption among major players in the industry.

Take a look at the trend: post-COVID, as remote work became the norm and Zoom replaced face-to-face interactions, interest in emotion recognition tech spiked. Yet, this enthusiasm seemed to wane shortly after. Why the sudden dip?

A TechCrunch article sheds light on this, quoting Stephen Bonner, a notable figure from the UK's privacy watchdog:

The U.K.’s privacy watchdog has warned against use of so-called “emotion analysis” technologies for anything more serious than kids’ party games, saying there’s a discrimination risk attached to applying “immature” biometric tech that makes pseudoscientific claims about being able to recognize people’s emotions using AI to interpret biometric data inputs.

Bonner's skepticism stems from a fundamental issue: a widespread misunderstanding among AI researchers about the nature of emotions and how to accurately measure them. The prevailing method, heavily reliant on facial recognition, assumes that emotions can be directly inferred from facial expressions. This oversimplified approach is not only scientifically dubious but also proves to be practically ineffective.

In reality, emotional expression is diverse and nuanced, far beyond simple, generic facial cues

Let's dive into the world of how emotions, specifically surprise, are depicted across various mediums. I embarked on a little experiment to understand this better. Here's what I did:

I started with MidJourney v6, where I generated an image of a person looking surprised. The result? A classic wide-eyed, open-mouthed expression that's often associated with shock or astonishment.

Next, I found a moment in one of my Zoom calls where I was genuinely surprised. The expression here was quite different — more nuanced, perhaps a subtle raise of the eyebrows or a slight parting of the lips.

I then looked at how surprise is portrayed in modern drama, finding a scene from the show Euphoria that captures this emotion.

Lastly, I turned to Google Search to see the collective internet's take on a surprised face, which unsurprisingly mirrored the exaggerated expressions found in MidJourney's output.

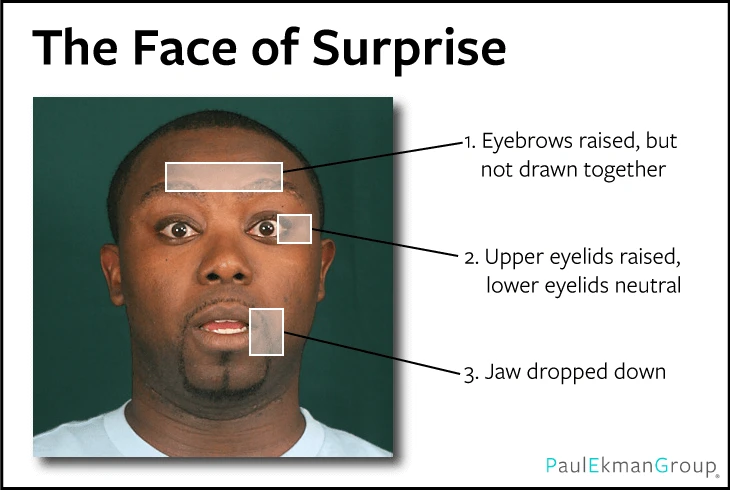

It's not surprising (pun intended!) that Google's and MidJourney's portrayals look alike: the dramatized expressions of surprise in both can be traced back to a widely recognized system of universal facial expressions.

This framework, established decades ago and embraced by psychologists around the globe, outlines a set of facial expressions that are commonly associated with specific emotions, including surprise. These expressions have been deeply ingrained in both academic study and popular culture, influencing how emotions are depicted and recognized across various platforms and media.

However, the real question is why this system of universal facial expressions steered these platforms toward such specific expressions when actual human reactions to surprise can be much more varied and subtle.

Where did these universal emotional facial expressions come from?

Let's explore the rich history and evolution of how emotions and facial expressions have been represented, leading up to modern understandings shaped by pioneers like Paul Ekman, the researcher most closely associated with the concept of universal facial expressions.

The practice of using facial expressions to convey emotions dates back centuries, with theaters employing masks to portray characters in specific emotional states. Starting from the basic dichotomy of tragedy and comedy masks, symbolizing sadness and happiness respectively, theater evolved to introduce more nuanced expressions through commedia dell'arte masks, which depicted complex archetypes.

Ancient Greek theatrical masks

Fast forward to the 1970s: Paul Ekman's groundbreaking work was the next big thing in facial expressions.

In 1971, Ekman's research paper "Constants across cultures in the face and emotion" laid the foundation for a new wave of studies linking facial expressions directly to emotions. His work, especially the Facial Action Coding System (FACS), introduced a methodical way to quantify facial expressions using Action Units, each corresponding to a specific movement of facial muscles.

Ekman's contributions are undeniably groundbreaking, promoting a scientific approach to understanding emotions that moved beyond theatrical representations.

However, it's important to note that his methods were developed in the 1970s, an era without high-tech tools we use today, such as fMRI or modern BCIs. These weren't available to examine the body and quantitatively measure emotions as they occurred internally.

Ekman ventured into the realm of facial expressions as a gateway to understanding emotions, relying heavily on subjective reports by asking individuals how they felt or interpreting emotions from photographs. This approach was groundbreaking for its time, yet it's worth noting that the scientific rigor we adhere to today has evolved considerably.

In Ekman's time, the field of psychology wasn't as tightly regulated as it is today. This era was more exploratory in nature, where the boundaries of research were broader, allowing for a range of experimental approaches. This freedom, while sparking innovation, also meant that some of Ekman's methodologies later faced scrutiny under the more rigorous scientific standards that evolved.

The modern approach: facial expressions are not emotions, and emotions are not facial expressions

Let's break down the evolution of emotion research and its implications for modern science and technology:

The study of emotions has undergone a significant transformation over the years. Initially the domain of psychologists in the early 20th century, emotion research has now expanded into the realm of neurobiology, offering deeper insights into how emotions are processed in the brain and body.

Paul Ekman, a pioneer in this field, identified six basic emotions and later expanded his list to include secondary emotions. His work laid the groundwork for understanding the universality and specificity of emotional expressions.

However, as technology and research methods have advanced, new findings challenge some of the foundational aspects of Ekman's theories. Today's scientists are equipped with sophisticated tools for analyzing brain and physiological data, such as fMRI and BCI, which help them understand what really happens inside the human mind. With these tools, they have made several critical discoveries:

Ekman's emotion recognition approach may not hold up under the scrutiny of real-world data: “In conclusion, our study shows a poor efficiency of Ekman’s model in explaining emotional situations of daily life.”

The assumption that specific facial expressions directly correspond to distinct emotions is being questioned. For example, the idea that happiness can be unequivocally inferred from a smile, or anger from a scowl, has been challenged. Oversimplification of emotional expressions doesn’t work. Companies and researchers might be flexing their tech, claiming they've cracked the emotion code. But when the data steps in, it's a whole different story.

Emotion is recognized as a complex phenomenon, influenced by both biological and sociological factors, with many unanswered questions remaining, such as the total number of emotions, their mechanisms, and how they are experienced and expressed by different individuals.

So, to summarize everything above: never use emotion recognition software that relies solely on the assumption that people smile when happy and frown when sad.

A man participating in a BCI-powered study

Okay, so what do facial expressions represent? And how can I use them in my AI research?

A facial expression is a whole symphony of anatomy: 30 muscles, pupils, eyeballs, skin, fat, connecting tissues, and bones getting in on the act. It is influenced by a complex interplay of factors:

Physiological Factors:

Neurotransmitter Influence: The presence and balance of neurotransmitters in the brain significantly impact emotional states and decision-making processes.

Amygdala Activation: This key brain structure plays a pivotal role in processing emotions. When the amygdala is activated, it can intensify emotional responses, directly influencing the expressiveness and subtlety of facial expressions.

Reflexive Responses: Automatic reactions such as blinking or yawning that can influence facial expressions.

Health and Neural Influences: Conditions like sleep deprivation, drug effects, or neurological disorders (e.g., Parkinson’s disease) can alter facial expressions.

Social Factors:

Unintentional Signaling: Individuals automatically translate social signals into expressions based on their past experience and on cultural and social norms, which can vary significantly from one context to another.

Intentional Signaling: The ability to manipulate facial muscles effectively, a skill that can range from involuntary expressions to trained, actor-level control.

Environmental Factors:

Reactions to Surroundings: Facial responses to environmental stimuli, such as squinting in bright light or reacting to temperature changes.

Engagement in Activities: Actions such as speaking, eating, or any physical exertion can temporarily affect facial expressions.

A person with Bell's palsy, a type of facial paralysis, attempting to show his teeth and raise his eyebrows. Facial paralysis can also be a symptom of a stroke or brain tumor

For AI researchers, facial expressions represent a valuable dataset, particularly when encoded with tools like FACS. Its Action Units, while simplifying complex muscle movements, offer a reliable method for categorizing facial expressions.

Utilizing such encodings could significantly enhance the capabilities of generative models, enabling them to generate a wider array of facial expressions that move beyond common stereotypes.
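As a rough illustration of what a FACS-style encoding can look like, here is a minimal sketch that turns a set of active Action Units into a fixed-length feature vector. The AU numbers and names follow FACS; the `encode_action_units` helper, the chosen AU subset, and the 0-to-1 intensity scale are assumptions made for this example, not a description of any particular tool's format.

```python
from typing import Dict, List

# A small subset of FACS Action Units (numbers and names follow FACS).
ACTION_UNITS: Dict[int, str] = {
    1: "inner brow raiser",
    2: "outer brow raiser",
    4: "brow lowerer",
    5: "upper lid raiser",
    6: "cheek raiser",
    12: "lip corner puller",
    26: "jaw drop",
}


def encode_action_units(intensities: Dict[int, float]) -> List[float]:
    """Map {AU number: intensity in [0, 1]} to a fixed-order feature vector.

    Hypothetical helper: unknown AUs are rejected, missing AUs default to 0.0.
    The 0-to-1 intensity scale is an assumption for illustration.
    """
    unknown = set(intensities) - set(ACTION_UNITS)
    if unknown:
        raise ValueError(f"Unsupported action units: {sorted(unknown)}")
    return [float(intensities.get(au, 0.0)) for au in sorted(ACTION_UNITS)]


# The stereotypical "surprised" face: raised brows, wide eyes, dropped jaw.
stereotyped_surprise = encode_action_units({1: 1.0, 2: 1.0, 5: 1.0, 26: 1.0})

# A subtler reaction: only a slight brow raise.
subtle_surprise = encode_action_units({1: 0.3, 2: 0.2})
```

Both vectors describe "surprise", yet they share very little. That gap is the reason a model trained only on the stereotyped version struggles with real footage.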

However, it's important to recognize that these encodings are merely one component of a larger picture. Comprehensive understanding of human behavior requires the integration of facial expressions with other diverse data sources within a multi-dimensional analytical model. Sole reliance on facial expressions, especially static ones, will provide an incomplete narrative, akin to attempting to grasp a movie's storyline from a single snapshot.

It's all about blending different kinds of datapoints—biometrics tell us about the physical side, cognitive data gives insight into the thought processes, environmental factors show the context, and social data helps normalize the results.

But what about emotions? How can these insights help me handle this type of data?

Predict only things you can get ground truth about

We've shifted from trying to pin down emotions to focusing on what businesses really need: actionable insights based on observable behaviors.

And this is exactly where large language models (LLMs) come into their element, showcasing their strength in navigating complex environments.

It turns out that while emotions are hard to quantify, behaviors like deceitfulness can be measured by language models more directly. There's plenty of data out there from real-life observations, showing how people act under certain conditions, that could be used as ground truth. For example, there are tons of videos where people get caught lying in court.
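To make "ground truth" concrete: once each clip carries an observed outcome (for example, whether a statement was later shown to be false), predictions can be scored like any binary classifier. The sketch below is a generic precision and recall calculation; the function name and the labels are made-up placeholders, not real results.

```python
from typing import Sequence, Tuple


def precision_recall(
    predicted: Sequence[bool], actual: Sequence[bool]
) -> Tuple[float, float]:
    """Precision and recall of boolean predictions against ground-truth labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    true_pos = sum(1 for p, a in pairs if p and a)
    false_pos = sum(1 for p, a in pairs if p and not a)
    false_neg = sum(1 for p, a in pairs if a and not p)
    # Guard against division by zero when there are no positives.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Placeholder labels for five clips: True means "deceptive".
model_says = [True, True, False, False, True]
ground_truth = [True, False, False, True, True]
precision, recall = precision_recall(model_says, ground_truth)
```

The point of the exercise is that "deceptive or not" can be checked against what actually happened, while "felt surprised or not" usually cannot.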

Here are some examples of the behaviors:

Deceitfulness

Impulsive Decision-Making

Intensive Cognitive Load

Hostile Social Interactions

Mania-affected Decisions

Attention Deficit

Fatigue

Satisfaction

Anxiety

This strategy opens up a world of applications:

In education, adapting teaching methods to fit a student's focus and energy can make learning more effective.

In wellness, tailoring meditation or therapy to an individual's current state can offer more personalized support.

In social spaces, identifying negative behaviors can create safer environments.

In professional settings, feedback on negotiation, acting, and empathy can enhance communication skills.

By focusing on actions rather than emotions, we're able to provide businesses with clear, actionable data that has a real impact.

Use tools to understand context and measure biomechanics

Before we even start analyzing facial expressions, we first take a step back to consider the external factors that might be influencing the picture. Is the person squinting because they're in bright sunlight? Is there something else happening around them that might be affecting their expression? Or perhaps they're in the middle of eating, which could explain the expression we're seeing.
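One simple way to picture this step is as a filter that sets aside facial movements the context already explains. The sketch below is an assumption-heavy illustration: the AU numbers follow FACS (7 is the lid tightener, 25 is lips part, 26 is jaw drop), but the context names, the mapping, and the `unexplained_action_units` helper are hypothetical.

```python
from typing import Dict, Set

# Assumed mapping from an observed context to the Action Units it can explain.
# AU numbers follow FACS; the context names and the mapping are illustrative.
CONTEXT_EXPLAINS: Dict[str, Set[int]] = {
    "bright_light": {7},
    "eating": {25, 26},
    "speaking": {25, 26},
}


def unexplained_action_units(active_aus: Set[int], contexts: Set[str]) -> Set[int]:
    """Return the active AUs that none of the observed contexts account for."""
    explained: Set[int] = set()
    for context in contexts:
        explained |= CONTEXT_EXPLAINS.get(context, set())
    return active_aus - explained


# Squinting (AU7) plus a raised inner brow (AU1) while outdoors in sunlight:
remaining = unexplained_action_units({1, 7}, {"bright_light"})
```

Here only the brow raise is left for further analysis; the squint is attributed to the sunlight.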

To get to the bottom of complex behaviors like deceit, we've developed a comprehensive set of tools to monitor a wide range of physiological signals: from heart rate variability and blood pressure to eye movements, pupil size, voice modulation, speech pace, and the positioning of facial muscles, among others.

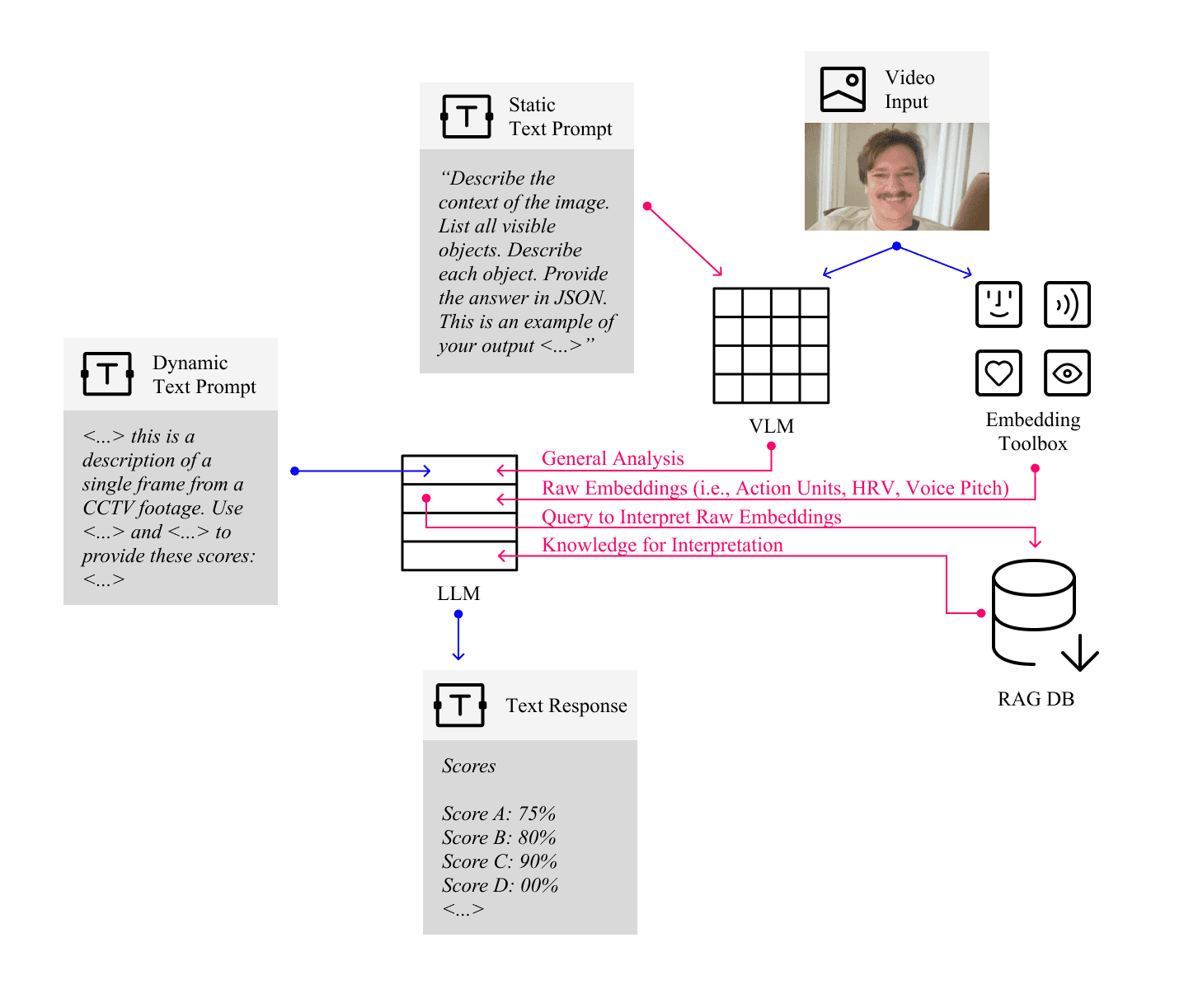
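Heart rate variability is one of the easier signals on that list to make concrete. A common time-domain measure is RMSSD, the root mean square of successive differences between heartbeat (RR) intervals. The sketch below computes it from a list of RR intervals in milliseconds; the sample values are invented, and how the intervals are obtained in the first place is outside its scope.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Invented sample: four consecutive RR intervals in milliseconds.
rr = [800.0, 810.0, 790.0, 805.0]
hrv = rmssd(rr)
```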

Creating our own set of tools was essential to meet the high standards we set for ourselves, and it's been quite the journey. Our system is powered by a Visual Language Model (VLM) that acts as the brain, supported by a Retrieval-Augmented Generation (RAG) system filled with scientific insights.
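The retrieval half of such a setup can be sketched in a few lines: score stored snippets against a query, keep the best matches, and prepend them to the prompt sent to the model. Everything below is a toy stand-in (word-overlap scoring, a three-item snippet store paraphrased from the factors listed earlier, hypothetical `retrieve` and `build_prompt` helpers). A real system would typically use embedding search and would pass video frames to the model alongside the text.

```python
import re
from typing import List, Set

# Placeholder knowledge snippets, paraphrased from the factors listed earlier.
SNIPPETS: List[str] = [
    "Squinting can be a reaction to bright light.",
    "Speaking or eating can temporarily affect facial expressions.",
    "Sleep deprivation can alter facial expressions.",
]


def tokens(text: str) -> Set[str]:
    """Lowercased alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, snippets: List[str], top_k: int = 1) -> List[str]:
    """Rank snippets by word overlap with the query (toy scoring)."""
    query_tokens = tokens(query)
    ranked = sorted(
        snippets, key=lambda s: len(tokens(s) & query_tokens), reverse=True
    )
    return ranked[:top_k]


def build_prompt(question: str, snippets: List[str]) -> str:
    """Prepend the best-matching snippets to the question as context."""
    context = "\n".join(f"- {s}" for s in retrieve(question, snippets))
    return f"Context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("Why is the person squinting in bright sunlight?", SNIPPETS)
```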

As for the performance of our model, we're excited about the results and plan to share them soon. Keep an eye out for our upcoming publication for all the details!

What’s next?

We're of the mindset that while AI isn't quite there yet with detecting emotions, it's making big strides in understanding human behavior through physical and biological data. The key to pushing this forward is having more experts in the field who truly understand mental states and their complexities.

Relying solely on facial expressions to gauge the basic six emotions seems a bit old-school and might not serve the needs of businesses well. It's time for a fresher approach. Given the talent and innovative research happening in this space today, we're optimistic about the advancements and positive impact this technology will have in the future.